(AEC), U.S. federal civilian agency established by the Atomic Energy Act, which was signed into law by President Harry S. Truman on Aug. 1, 1946, to control the development and production of nuclear weapons and to direct the research and development of peaceful uses of nuclear energy. On Dec. 31, 1946, the AEC succeeded the Manhattan Engineer District of the U.S. Army Corps of Engineers (which had developed the atomic bomb during World War II) and thus officially took control of the nation's nuclear program.

A nuclear explosion releases energy in a variety of forms, including blast, heat, and radiation (X rays, gamma rays, and neutrons). By varying a weapon's design, these effects could be tailored for a specific military purpose. In an enhanced-radiation weapon, more commonly called a neutron bomb, the objective was to minimize the blast by reducing the fission yield and to enhance the neutron radiation. Such a weapon would prove lethal to invading troops without, it was hoped, destroying the defending country's towns and countryside. It was actually a small (on the order of one kiloton), two-stage thermonuclear weapon that utilized deuterium and tritium, rather than lithium deuteride, to maximize the release of fast neutrons. The first U.S. application of this principle was an antiballistic missile warhead in the mid-1970s. Enhanced-radiation warheads were produced for the Lance short-range ballistic missile and for an eight-inch artillery shell.

Though it had virtually created the American nuclear-power industry, the AEC also had to regulate that industry to ensure public health and safety and to safeguard national security. Because these dual roles often conflicted with each other, the U.S. government under the Energy Reorganization Act of 1974 disbanded the AEC and divided its functions between two new agencies: the Nuclear Regulatory Commission (q.v.), which regulates the nuclear-power industry; and the Energy Research and Development Administration, which was disbanded in 1977 when the Department of Energy was created.

autonomous intergovernmental organization dedicated to increasing the contribution of atomic energy to the world's peace and well-being and ensuring that agency assistance is not used for military purposes. The IAEA and its director general, Mohamed ElBaradei, won the Nobel Prize for Peace in 2005.

The agency was established by representatives of more than 80 countries in October 1956, nearly three years after U.S. President Dwight D. Eisenhower's “Atoms for Peace” speech to the United Nations General Assembly, in which Eisenhower called for the creation of an international organization for monitoring the diffusion of nuclear resources and technology. The IAEA's statute officially came into force on July 29, 1957. Its activities include research on the applications of atomic energy to medicine, agriculture, water resources, and industry; the operation of conferences, training programs, fellowships, and publications to promote the exchange of technical information and skills; the provision of technical assistance, especially to less-developed countries; and the establishment and administration of radiation safeguards. As part of the Treaty on the Non-Proliferation of Nuclear Weapons (1968), all non-nuclear powers are required to negotiate a safeguards agreement with the IAEA; as part of that agreement, the IAEA is given authority to monitor nuclear programs and to inspect nuclear facilities.

The General Conference, consisting of all members (in the early 21st century some 135 countries were members), meets annually to approve the budget and programs and to debate the IAEA's general policies; it also is responsible for approving the appointment of a director general and admitting new members. The Board of Governors, which consists of 35 members who meet about five times per year, is charged with carrying out the agency's statutory functions, approving safeguards agreements, and appointing the director general. The day-to-day affairs of the IAEA are run by the Secretariat, which is headed by the director general, who is assisted by six deputies; the Secretariat's departments include nuclear energy, nuclear safety, nuclear sciences and application, safeguards, and technical cooperation. Headquarters are in Vienna.

also called atomic weapon, or thermonuclear weapon, bomb or other warhead that derives its force from either the fission or the fusion of atomic nuclei and is delivered by an aircraft, missile, Earth satellite, or other strategic delivery system.

Nuclear weapons have enormous explosive force. Their significance may best be appreciated by the coining of the words kiloton (1,000 tons) and megaton (one million tons) to describe their blast effect in equivalent weights of TNT. For example, the first nuclear fission bomb, the one dropped on Hiroshima, Japan, in 1945, released energy equaling 15,000 tons (15 kilotons) of chemical explosive from less than 130 pounds (60 kilograms) of uranium. Fusion bombs, on the other hand, have given yields up to almost 60 megatons.
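The kiloton and megaton figures above can be turned into energy units with a little arithmetic. The sketch below assumes the common convention that one ton of TNT corresponds to 4.184 × 10^9 joules, a value that does not appear in the text; the function names are illustrative only.

```python
# Assumption (not from the text): one ton of TNT is conventionally
# taken to be 4.184e9 joules.
JOULES_PER_TON_TNT = 4.184e9


def tons_tnt_to_joules(tons: float) -> float:
    """Convert a yield in tons of TNT equivalent to joules."""
    return tons * JOULES_PER_TON_TNT


def kilotons_to_joules(kilotons: float) -> float:
    """A kiloton is 1,000 tons of TNT equivalent."""
    return tons_tnt_to_joules(kilotons * 1_000)


def megatons_to_joules(megatons: float) -> float:
    """A megaton is one million tons of TNT equivalent."""
    return tons_tnt_to_joules(megatons * 1_000_000)


if __name__ == "__main__":
    hiroshima = kilotons_to_joules(15)  # yield quoted in the text
    largest = megatons_to_joules(60)    # "almost 60 megatons"
    print(f"15 kilotons = {hiroshima:.3e} J")
    print(f"60 megatons = {largest:.3e} J")
    # Energy per kilogram, using the 60 kg upper bound given in the text.
    print(f"per kg of uranium: {hiroshima / 60:.3e} J/kg")
```

Dividing by the 60 kilograms quoted for the Hiroshima bomb gives only a rough lower bound on the energy per kilogram, since the text says the uranium weighed less than that.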

The first nuclear weapons were bombs delivered by aircraft; warheads for strategic ballistic missiles, however, have become by far the most important nuclear weapons. There are also smaller tactical nuclear weapons that include artillery projectiles, demolition munitions (land mines), antisubmarine depth bombs, torpedoes, and short-range ballistic and cruise missiles. The U.S. stockpile of nuclear weapons reached its peak in 1967 with more than 32,000 warheads of 30 different types; the Soviet stockpile reached its peak of about 33,000 warheads in 1988.

The basic principle of nuclear fission weapons (also called atomic bombs) involves the assembly of a sufficient amount of fissile material (e.g., the uranium isotope uranium-235 or the plutonium isotope plutonium-239) to “go supercritical”—that is, for neutrons (which cause fission and are in turn released during fission) to be produced at a much faster rate than they can escape from the assembly. There are two ways in which a subcritical assembly of fissionable material can be rendered supercritical and made to explode. The subcritical assembly may consist of two parts, each of which is too small to have a positive multiplication rate; the two parts can be shot together by a gun-type device. Alternatively, a subcritical assembly surrounded by a chemical high explosive may be compressed into a supercritical one by detonating the explosive.
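The difference between a subcritical and a supercritical assembly can be illustrated with a toy generation-by-generation model. This is a sketch only: the multiplication factor k (the average number of neutrons from one fission that go on to cause another) and the sample values used here are hypothetical and are not taken from the text.

```python
def neutron_population(k: float, generations: int, initial: float = 1.0) -> float:
    """Neutron count after a number of generations in a toy model.

    k is the average number of neutrons per fission that cause a further
    fission instead of escaping from the assembly or being absorbed.
    """
    return initial * k ** generations


def classify(k: float) -> str:
    """Label an assembly by its multiplication factor."""
    if k < 1.0:
        return "subcritical"
    if k == 1.0:
        return "critical"
    return "supercritical"


if __name__ == "__main__":
    # Hypothetical k values chosen only to show the three regimes.
    for k in (0.9, 1.0, 2.0):
        print(classify(k), neutron_population(k, 80))
```

With k below 1 the population dies away, while with k above 1 it grows geometrically, which is why neutrons must be produced faster than they escape for an explosion to occur.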

The basic principle of the fusion weapon (also called the thermonuclear or hydrogen bomb) is to produce ignition conditions in a thermonuclear fuel such as deuterium, an isotope of hydrogen with double the weight of normal hydrogen, or lithium deuteride. The Sun may be considered a thermonuclear device; its main fuel is hydrogen, which it consumes in its core at temperatures of 18,000,000° to 36,000,000° F (10,000,000° to 20,000,000° C). To achieve comparable temperatures in a weapon, a fission triggering device is used.

Following the discovery of artificial radioactivity in the 1930s, the Italian physicist Enrico Fermi performed a series of experiments in which he exposed many elements to low-velocity neutrons. When he exposed thorium and uranium, chemically different radioactive products resulted, indicating that new elements had been formed, rather than merely isotopes of the original elements. Fermi concluded that he had produced elements beyond uranium (element 92), then the last element in the periodic table; he called them transuranic elements and named two of them ausenium (element 93) and hesperium (element 94). During the autumn of 1938, however, when Fermi was receiving the Nobel Prize for his work, Otto Hahn and Fritz Strassmann of Germany discovered that one of the “new” elements was actually barium (element 56).

The Danish scientist Niels Bohr visited the United States in January 1939, carrying with him an explanation, devised by the Austrian refugee scientist Lise Meitner and her nephew Otto Frisch, of the process behind Hahn's surprising data. Low-velocity neutrons caused the uranium nucleus to fission, or break apart, into two smaller pieces; the combined atomic numbers of the two pieces—for example, barium and krypton—equalled that of the uranium nucleus. Much energy was released in the process. This news set off experiments at many laboratories. Bohr worked with John Wheeler at Princeton; they postulated that the uranium isotope uranium-235 was the one undergoing fission; the other isotope, uranium-238, merely absorbed the neutrons. It was discovered that neutrons were produced during the fission process; on the average, each fissioning atom produced more than two neutrons. If the proper amount of material were assembled, these free neutrons might create a chain reaction. Under special conditions, a very fast chain reaction might produce a very large release of energy; in short, a weapon of fantastic power might be feasible.

The possibility that such a weapon might first be developed by Nazi Germany alarmed many scientists and was drawn to the attention of President Franklin D. Roosevelt by Albert Einstein, then living in the United States. The president appointed an Advisory Committee on Uranium; it reported that a chain reaction in uranium was possible, though unproved. Chain-reaction experiments with carbon and uranium were started in New York City at Columbia University, and in March 1940 it was confirmed that the isotope uranium-235 was responsible for low-velocity neutron fission in uranium. The Advisory Committee on Uranium increased its support of the Columbia experiments and arranged for a study of possible methods for separating the uranium-235 isotope from the much more abundant uranium-238. (Normal uranium contains approximately 0.7 percent uranium-235, most of the remainder being uranium-238.) The centrifuge process, in which the heavier isotope is spun to the outside, as in a cream separator, at first seemed the most useful, but at Columbia a rival process was proposed. In that process, gaseous uranium hexafluoride is diffused through barriers, or filters; more molecules containing the lighter isotope, uranium-235, would pass through the filter than those containing the heavier isotope, slightly enriching the mixture on the far side. A sequence of several thousand stages would be needed to enrich the mixture to 90 percent uranium-235; the total barrier area would be many acres.
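The scale of the gaseous diffusion cascade can be estimated with a short calculation. The sketch below assumes an idealized per-stage separation factor given by Graham's law (the square root of the ratio of the two hexafluoride molecular masses) and integer mass numbers, with fluorine taken as 19; neither assumption comes from the text, and real barriers perform below this ideal.

```python
import math

FLUORINE_MASS = 19  # assumed integer mass number for fluorine


def uf6_mass(uranium_mass_number: int) -> int:
    """Approximate molecular mass of uranium hexafluoride."""
    return uranium_mass_number + 6 * FLUORINE_MASS


def ideal_stage_factor() -> float:
    """Ideal enrichment factor per diffusion stage (Graham's law)."""
    return math.sqrt(uf6_mass(238) / uf6_mass(235))


def abundance_ratio(fraction: float) -> float:
    """Ratio of uranium-235 to uranium-238 for a given U-235 fraction."""
    return fraction / (1.0 - fraction)


def minimum_stages(feed_fraction: float, product_fraction: float) -> float:
    """Ideal number of stages if each multiplies the abundance ratio once."""
    gain = abundance_ratio(product_fraction) / abundance_ratio(feed_fraction)
    return math.log(gain) / math.log(ideal_stage_factor())


if __name__ == "__main__":
    print(f"ideal factor per stage: {ideal_stage_factor():.5f}")
    # 0.7 percent feed and 90 percent product are the figures in the text.
    print(f"ideal stage count: {minimum_stages(0.007, 0.90):.0f}")
```

Because each stage enriches the mixture by well under 1 percent even in the ideal case, the stage count runs into the thousands, consistent with the figure given above.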

During the summer of 1940, Edwin McMillan and Philip Abelson of the University of California at Berkeley discovered element 93, named neptunium; they inferred that this element would decay into element 94. The Bohr and Wheeler fission theory suggested that one of the isotopes, mass number 239, of this new element might also fission under low-velocity neutron bombardment. The cyclotron at the University of California at Berkeley was put to work to make enough element 94 for experiments; by mid-1941, element 94 had been firmly identified and named plutonium, and its fission characteristics had been established. Low-velocity neutrons did indeed cause it to undergo fission, and at a rate much higher than that of uranium-235. The Berkeley group, under Ernest Lawrence, was also considering producing large quantities of uranium-235 by turning one of their cyclotrons into a super mass spectrograph. A mass spectrograph employs a magnetic field to bend a current of uranium ions; the heavier ions (uranium-238) bend at a larger radius than the lighter ions (uranium-235), allowing the two separated currents to be collected in separate receivers.
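The bending of the two ion beams in a mass spectrograph can be sketched numerically. The example assumes singly charged uranium ions accelerated through the same voltage before entering a uniform magnetic field, and approximates each ion's mass by its mass number; the voltage and field values are hypothetical and serve only to illustrate the effect.

```python
import math

ATOMIC_MASS_UNIT_KG = 1.66053906660e-27
ELEMENTARY_CHARGE_C = 1.602176634e-19


def orbit_radius_m(mass_number: int, accel_volts: float,
                   field_tesla: float, charge_state: int = 1) -> float:
    """Radius of the circular path of an ion in a uniform magnetic field."""
    mass = mass_number * ATOMIC_MASS_UNIT_KG
    charge = charge_state * ELEMENTARY_CHARGE_C
    # Energy gained in the accelerating gap: q V = m v^2 / 2
    speed = math.sqrt(2.0 * charge * accel_volts / mass)
    # The magnetic force supplies the centripetal force: q v B = m v^2 / r
    return mass * speed / (charge * field_tesla)


if __name__ == "__main__":
    # Hypothetical operating values, not taken from the text.
    volts, tesla = 35_000.0, 0.34
    r235 = orbit_radius_m(235, volts, tesla)
    r238 = orbit_radius_m(238, volts, tesla)
    print(f"U-235 radius: {r235:.4f} m")
    print(f"U-238 radius: {r238:.4f} m")
    print(f"separation after half a turn: {2 * (r238 - r235) * 1000:.1f} mm")
```

Under these assumptions the radius scales with the square root of the ion mass, so the heavier uranium-238 ions follow a slightly larger circle than the uranium-235 ions.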

In May 1941 a review committee reported that a nuclear explosive probably could not be available before 1945, that a chain reaction in natural uranium was probably 18 months off, and that it would take at least an additional year to produce enough plutonium for a bomb and three to five years to separate enough uranium-235. Further, it was held that all of these estimates were optimistic. In late June 1941 President Roosevelt established the Office of Scientific Research and Development under the direction of the scientist Vannevar Bush.

In the fall of 1941 the Columbia chain-reaction experiment with natural uranium and carbon yielded negative results. A review committee concluded that boron impurities might be poisoning it by absorbing neutrons. It was decided to transfer all such work to the University of Chicago and repeat the experiment there with high-purity carbon. At Berkeley, the cyclotron, converted into a mass spectrograph (later called a calutron), was exceeding expectations in separating uranium-235, and it was enlarged to a 10-calutron system capable of producing one-tenth of an ounce (about three grams) of uranium-235 per day.

The U.S. entry into World War II in December 1941 was decisive in providing funds for a massive research and production effort for obtaining fissionable materials, and in May 1942 the momentous decision was made to proceed simultaneously on all promising production methods. Bush decided that the army should be brought into the production plant construction activities. The Corps of Engineers opened an office in New York City and named it the Manhattan Engineer District Office. After considerable argument over priorities, a workable arrangement was achieved with the formation of a three-man policy board chaired by Bush and the appointment on September 17 of Colonel Leslie Groves as head of the Manhattan Engineer District. Groves arranged contracts for a gaseous diffusion separation plant, a plutonium production facility, and a calutron pilot plant, which might be expanded later. The day before the success of Fermi's chain-reaction experiment at the University of Chicago on Dec. 2, 1942, Groves (now a brigadier general) signed the construction contract for the plutonium production reactors. Many problems were still unsolved, however. First, the gaseous diffusion barrier had not yet been demonstrated as practical. Second, Berkeley had been successful with its empirically designed calutron, but the Oak Ridge pilot plant contractors were understandably uneasy about the rough specifications available for the massive separation of uranium-235, which was designated the Y-12 effort. Third, plutonium chemistry was almost unknown; in fact, it was not known whether or not plutonium gave off neutrons during fission, or, if so, how many.

Meantime, as part of the June 1942 reorganization, J. Robert Oppenheimer became, in October, the director of Project Y, the group that was to design the actual weapon. This effort was spread over several locations. On November 16 Groves and Oppenheimer visited the former Los Alamos Ranch School, some 60 miles (100 kilometres) north of Albuquerque, N.M., and on November 25 Groves approved it as the site for the Los Alamos Scientific Laboratory. By July 1943 two essential and encouraging pieces of experimental data had been obtained: plutonium did give off neutrons in fission, more than uranium-235 did; and the neutrons were emitted in a short time compared to that needed to bring the weapon materials into a supercritical assembly. The theorists contributed one discouraging note: their estimate of the critical mass for uranium-235 had risen over threefold, to something between 50 and 100 pounds.

The emphasis during the summer and fall of 1943 was on the gun method of assembly, in which the projectile, a subcritical piece of uranium-235 (or plutonium-239), would be placed in a gun barrel and fired into the target, another subcritical piece of uranium-235. After the mass was joined (and now supercritical), a neutron source would be used to start the chain reaction. A problem developed with applying the gun method to plutonium, however. In manufacturing plutonium-239 from uranium-238 in a reactor, some of the plutonium-239 absorbs a neutron and becomes plutonium-240. This material undergoes spontaneous fission, producing neutrons. Some neutrons will always be present in a plutonium assembly and cause it to begin multiplying as soon as it goes critical, before it reaches supercriticality; it will then explode prematurely and produce comparatively little energy. The gun designers tried to beat this problem by achieving higher projectile speeds, but they lost out in the end to a better idea—the implosion method.

In April 1943 a Project Y physicist, Seth Neddermeyer, proposed to assemble a supercritical mass from many directions, instead of just two as in the gun. In particular, a number of shaped charges placed on the surface of a sphere would fire many subcritical pieces into one common ball at the centre of the sphere. John von Neumann, a mathematician who had had experience in shaped-charge, armour-piercing work, supported the implosion method enthusiastically and pointed out that the greater speed of assembly might solve the plutonium-240 problem. The physicist Edward Teller suggested that the converging material might also become compressed, offering the possibility that less material would be needed. By late 1943 the implosion method was being given an increasingly higher priority; by July 1944 it had become clear that the plutonium gun could not be built. The only way to use plutonium in a weapon was by the implosion method.

By 1944 the Manhattan Project was spending money at a rate of more than $1 billion per year. The situation was likened to a nightmarish horse race; no one could say which of the horses (the calutron plant, the diffusion plant, or the plutonium reactors) was likely to win or whether any of them would even finish the race. In July 1944 the first Y-12 calutrons had been running for three months but were operating at less than 50 percent efficiency; the main problem was in recovering the large amounts of material that reached neither the uranium-235 nor uranium-238 boxes and, thus, had to be rerun through the system. The gaseous diffusion plant was far from completion, the production of satisfactory barriers remaining the major problem. And the first plutonium reactor at Hanford, Wash., had been turned on in September, but it had promptly turned itself off. Solving this problem, which proved to be caused by absorption of neutrons by one of the fission products, took several months. These delays meant almost certainly that the war in Europe would be over before the weapon could be ready. The ultimate target was slowly changing from Germany to Japan.

Within 24 hours of Roosevelt's death on April 12, 1945, President Harry S. Truman was told briefly about the atomic bomb by Secretary of War Henry Stimson. On April 25 Stimson, with Groves's assistance, gave Truman a more extensive briefing on the status of the project: the uranium-235 gun design had been frozen, but sufficient uranium-235 would not be accumulated until around August 1. Enough plutonium-239 would be available for an implosion assembly to be tested in early July; a second would be ready in August. Several B-29s had been modified to carry the weapons, and support construction was under way at Tinian, in the Mariana Islands, 1,500 miles south of Japan.

The test of the plutonium weapon was named Trinity; it was fired at 5:29:45 AM (local time) on July 16, 1945, at the Alamogordo Bombing Range in south central New Mexico. The theorists' predictions of the energy release ranged from the equivalent of less than 1,000 tons of TNT to 45,000 tons. The test produced an energy, or yield, equivalent to 21,000 tons of TNT.

A single B-29 bomber, named the Enola Gay, flew over Hiroshima, Japan, on Monday, Aug. 6, 1945, at 8:15 in the morning, local time. The untested uranium-235 gun-assembly bomb, nicknamed Little Boy, was air-burst 1,900 feet (580 metres) above the city to maximize destruction. Two-thirds of the city area was destroyed. The population actually present at the time was estimated at 350,000; of these, 140,000 died by the end of the year. The second weapon, a duplicate of the plutonium-239 implosion assembly tested in Trinity and nicknamed Fat Man, was to be dropped on Kokura on August 11; a third was being prepared in the United States for possible use in late August or early September. To avoid bad weather, the schedule was moved up two days to August 9. The B-29, named Bock's Car, spent 10 minutes over Kokura without sighting its aim point; it then proceeded to the secondary target of Nagasaki, where, at 11:02 AM local time, the weapon was air-burst at 1,650 feet with a force later estimated at 21 kilotons. About half the city was destroyed, and, of the estimated 270,000 people present at the time, about 70,000 died by the end of the year.

Scientists in several countries performed experiments in connection with nuclear reactors and fission weapons during World War II, but no country other than the United States carried its projects as far as separating uranium-235 or manufacturing plutonium-239.

By the time the war began on Sept. 1, 1939, Germany had a special office for the military application of nuclear fission; chain-reaction experiments with uranium and carbon were being planned, and ways of separating the uranium isotopes were under study. Some measurements on carbon, later shown to be in error, led the physicist Werner Heisenberg to recommend that heavy water be used, instead, for the moderator. This dependence on scarce heavy water was a major reason the German experiments never reached a successful conclusion. The isotope separation studies were oriented toward low enrichments (about 1 percent uranium-235) for the chain reaction experiments; they never got past the laboratory apparatus stage, and several times these prototypes were destroyed in bombing attacks. As for the fission weapon itself, it was a rather distant goal, and practically nothing but “back-of-the-envelope” studies were done on it.

Like their counterparts elsewhere, Japanese scientists initiated research on an atomic bomb. In December 1940, Japan's leading scientist, Nishina Yoshio, undertook a small-scale research effort supported by the armed forces. It did not progress beyond the laboratory owing to lack of government support, resources, and uranium.

The British weapon project started informally, as in the United States, among university physicists. In April 1940 a short paper by Otto Frisch and Rudolf Peierls, expanding on the idea of critical mass, estimated that a superweapon could be built using several pounds of pure uranium-235 and that this amount of material might be obtainable from a chain of diffusion tubes. This three-page memorandum was the first report to foretell with scientific conviction the practical possibility of making a bomb and the horrors it would bring. A group of scientists known as the MAUD committee was set up in the Ministry of Aircraft Production in April 1940 to decide if a uranium bomb could be made. The committee approved a report on July 15, 1941, concluding that the scheme for a uranium bomb was practicable, that work should continue on the highest priority, and that collaboration with the Americans should be continued and expanded. As the war took its toll on the economy, the British position evolved through 1942 and 1943 to one of full support for the American project with the realization that Britain's major effort would come after the war. While the British program was sharply reduced at home, approximately 50 scientists and engineers went to the United States at the end of 1943 and during 1944 to work on various aspects of the Manhattan Project. The valuable knowledge and experience they acquired sped the development of the British bomb after 1945.

The formal postwar decision to manufacture a British atomic bomb was made by Prime Minister Clement Attlee's government during a meeting of the Defence Subcommittee of the Cabinet in early January 1947. The construction of a first reactor to produce fissile material and associated facilities had gotten under way the year before. William Penney, a member of the British team at Los Alamos during the war, was placed in charge of fabricating and testing the bomb, which was to be of a plutonium type similar to the one dropped on Nagasaki. That Britain was developing nuclear weapons was not made public until Feb. 17, 1952, when Prime Minister Winston Churchill declared plans to test the first British-made atomic bomb at the Monte Bello Islands off the northwest coast of Australia; Churchill made the official announcement in a speech before the House of Commons on February 26, at which time he also reported that the country had the manufacturing infrastructure to ensure regular production of the bomb. On Oct. 3, 1952, the first British atomic weapons test, called Hurricane, was successfully conducted aboard the frigate HMS Plym. By early 1954 Royal Air Force Canberra bombers were armed with atomic bombs.

In the decade before the war, Soviet physicists were actively engaged in nuclear and atomic research. By 1939 they had established that, once uranium has been fissioned, each nucleus emits neutrons and can therefore, at least in theory, begin a chain reaction. The following year, physicists concluded that such a chain reaction could be ignited in either natural uranium or its isotope, uranium-235, and that this reaction could be sustained and controlled with a moderator such as heavy water. In June 1940 the Soviet Academy of Sciences established the Uranium Commission to study the “uranium problem.”

In February 1939, news had reached Soviet physicists of the discovery of nuclear fission in the West. The military implications of such a discovery were immediately apparent, but Soviet research was brought to a halt by the German invasion in June 1941. In early 1942 the physicist Georgy N. Flerov noticed that articles on nuclear fission were no longer appearing in western journals; this indicated that research on the subject had become secret. In response, Flerov wrote to, among others, Premier Joseph Stalin, insisting that “we must build the uranium bomb without delay.” In 1943 Stalin ordered the commencement of a research project under the supervision of Igor V. Kurchatov, who had been director of the nuclear physics laboratory at the Physico-Technical Institute in Leningrad. Kurchatov initiated work on three fronts: achieving a chain reaction in a uranium pile, designing both uranium-235 and plutonium bombs, and separating isotopes from these materials.

By the end of 1944, 100 scientists were working under Kurchatov, and by the time of the Potsdam Conference, which brought the Allied leaders together the day after the Trinity test, the project on the atomic bomb was seriously under way. During one session at the conference, Truman remarked to Stalin that the United States had built a “new weapon of unusual destructive force.” Stalin replied that he would like to see the United States make “good use of it against the Japanese.”

Upon his return from Potsdam, Stalin ordered that work on the fission bomb proceed at a faster pace. On Aug. 7, 1945, the day after the bombing of Hiroshima, he placed Lavrenty P. Beria, the chief of secret police, in charge of the Soviet version of the Manhattan Project. The first Soviet chain reaction took place in Moscow on Dec. 25, 1946, using an experimental graphite-moderated natural uranium pile, and the first plutonium production reactor became operational at Kyshtym, in the Ural Mountains, on June 19, 1948. The first Soviet weapon test occurred on Aug. 29, 1949, using plutonium; it had a yield of 10 to 20 kilotons.

French scientists, such as Henri Becquerel, Marie and Pierre Curie, and Frédéric and Irène Joliot-Curie, made important contributions to 20th-century atomic physics. During World War II several French scientists participated in an Anglo-Canadian project in Canada, where eventually a heavy water reactor was built at Chalk River, Ont., in 1945.

On Oct. 18, 1945, the Atomic Energy Commission (Commissariat à l'Énergie Atomique; CEA) was established by General Charles de Gaulle with the objective of exploiting the scientific, industrial, and military potential of atomic energy. The military application of atomic energy did not begin until 1951. In July 1952 the National Assembly adopted a five-year plan, a primary goal of which was to build plutonium production reactors. Work began on a reactor at Marcoule in the summer of 1954 and on a plutonium separating plant the following year.

On Dec. 26, 1954, the issue of proceeding with a French bomb was raised at Cabinet level. The outcome was that Prime Minister Pierre Mendès-France launched a secret program to develop a bomb. On Nov. 30, 1956, a protocol was signed specifying tasks the CEA and the Defense Ministry would perform. These included providing the plutonium, assembling a device, and preparing a test site. On July 22, 1958, de Gaulle, who had resumed power as prime minister, set the date for the first atomic explosion to occur within the first three months of 1960. On Feb. 13, 1960, the French detonated their first atomic bomb from a 330-foot tower in the Sahara in what was then French Algeria.

On Jan. 15, 1955, Mao Zedong (Mao Tse-tung) and the Chinese leadership decided to obtain their own nuclear arsenal. From 1955 to 1958 the Chinese were partially dependent upon the Soviet Union for scientific and technological assistance, but from 1958 until the break in relations in 1960 they became more and more self-sufficient. Facilities were built to produce and process uranium and plutonium at the Lan-chou Gaseous Diffusion Plant and the Chiu-ch'üan Atomic Energy Complex, both in the northwestern province of Kansu. A design laboratory (called the Ninth Academy) was established at Hai-yen, east of the Koko Nor in Tsinghai province. A test site at Lop Nor, in far northwestern China, was established in October 1959. Overall leadership and direction was provided by Nie Rongzhen (Nieh Jung-chen), director of the Defense Science and Technology Commission.

Unlike the initial U.S. or Soviet tests, the first Chinese detonation—on Oct. 16, 1964—used uranium-235 in an implosion-type configuration. Plutonium was not used until the eighth explosion, on Dec. 27, 1968.

On May 18, 1974, India detonated a nuclear device in the Rājasthān desert near Pokaran with a reported yield of 15 kilotons. India characterized the test as being for peaceful purposes and apparently did not stockpile weapons. Pakistan declared its nuclear program to be solely for peaceful purposes, but it acquired the necessary infrastructure of facilities to produce weapons and was generally believed to possess them.

Several other countries were believed to have built nuclear weapons or to have acquired the capability of assembling them on short notice. Israel was believed to have built an arsenal of more than 200 weapons, including thermonuclear bombs. In August 1988 the South African foreign minister said that South Africa had “the capability to [produce a nuclear bomb] should we want to.” Argentina, Brazil, South Korea, and Taiwan also had the scientific and industrial base to develop and produce nuclear weapons, but they did not seem to have active programs.

U.S. research on thermonuclear weapons started from a conversation in September 1941 between Fermi and Teller. Fermi wondered if the explosion of a fission weapon could ignite a mass of deuterium sufficiently to begin thermonuclear fusion. (Deuterium, an isotope of hydrogen with one proton and one neutron in the nucleus—i.e., twice the normal weight—makes up 0.015 percent of natural hydrogen and can be separated in quantity by electrolysis and distillation. It exists in liquid form only below about −417° F, or −250° C.) Teller undertook to analyze the thermonuclear processes in some detail and presented his findings to a group of theoretical physicists convened by Oppenheimer in Berkeley in the summer of 1942. One participant, Emil Konopinski, suggested that the use of tritium be investigated as a thermonuclear fuel, an insight that would later be important to most designs. (Tritium, an isotope of hydrogen with one proton and two neutrons in the nucleus—i.e., three times the normal weight—does not exist in nature except in trace amounts, but it can be made by irradiating lithium in a nuclear reactor. It is radioactive and has a half-life of 12.5 years.)
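The practical meaning of tritium's half-life can be shown with the standard exponential decay relation. The sketch below uses the 12.5-year figure quoted above; the function name and the sample time spans are illustrative only.

```python
TRITIUM_HALF_LIFE_YEARS = 12.5  # value quoted in the text


def fraction_remaining(years: float,
                       half_life: float = TRITIUM_HALF_LIFE_YEARS) -> float:
    """Fraction of an initial tritium supply left after a given time."""
    return 0.5 ** (years / half_life)


if __name__ == "__main__":
    # Sample time spans chosen for illustration.
    for years in (1.0, 5.0, 12.5, 25.0):
        share = fraction_remaining(years)
        print(f"after {years:4.1f} years: {share:.1%} remains")
```

Half of any supply is gone after one half-life and three-quarters after two, so tritium has to be made continually instead of being stockpiled indefinitely.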

As a result of these discussions the participants concluded that a weapon based on thermonuclear fusion was possible. When the Los Alamos laboratory was being planned, a small research program on the Super, as it came to be known, was included. Several conferences were held at the laboratory in late April 1943 to acquaint the new staff members with the existing state of knowledge and the direction of the research program. The consensus was that modest thermonuclear research should be pursued along theoretical lines. Teller proposed more intensive investigations, and some work did proceed, but the more urgent task of developing a fission weapon always took precedence—a necessary prerequisite for a hydrogen bomb in any event.

In the fall of 1945, after the success of the atomic bomb and the end of World War II, the future of the Manhattan Project, including Los Alamos and the other facilities, was unclear. Government funding was severely reduced, many scientists returned to universities and to their careers, and contractor companies turned to other pursuits. The Atomic Energy Act, signed by President Truman on Aug. 1, 1946, established the Atomic Energy Commission (AEC), replacing the Manhattan Engineer District, and gave it civilian authority over all aspects of atomic energy, including oversight of nuclear warhead research, development, testing, and production.

From April 18 to 20, 1946, a conference led by Teller at Los Alamos reviewed the status of the Super. At that time it was believed that a fission weapon could be used to ignite one end of a cylinder of liquid deuterium and that the resulting thermonuclear reaction would self-propagate to the other end. This conceptual design was known as the “classical Super.”

One of the two central design problems was how to ignite the thermonuclear fuel. It was recognized early on that a mixture of deuterium and tritium theoretically could be ignited at lower temperatures and would have a faster reaction time than deuterium alone, but the question of how to achieve ignition remained unresolved. The other problem, equally difficult, was whether and under what conditions burning might proceed in thermonuclear fuel once ignition had taken place. An exploding thermonuclear weapon involves many extremely complicated, interacting physical and nuclear processes. The speeds of the exploding materials can be up to millions of feet per second, temperatures and pressures are greater than those at the centre of the Sun, and time scales are billionths of a second. To resolve whether the “classical Super” or any other design would work required accurate numerical models of these processes—a formidable task, since the computers that would be needed to perform the calculations were still under development. Also, the requisite fission triggers were not yet ready, and the limited resources of Los Alamos could not support an extensive program.

On Sept. 23, 1949, Truman announced that “we have evidence that within recent weeks an atomic explosion occurred in the U.S.S.R.” This first Soviet test stimulated an intense, four-month, secret debate about whether to proceed with the hydrogen bomb project. One of the strongest statements of opposition to a hydrogen bomb program came from the General Advisory Committee (GAC) of the AEC, chaired by Oppenheimer. In their report of Oct. 30, 1949, the majority recommended “strongly against” initiating an all-out effort, believing “that extreme dangers to mankind inherent in the proposal wholly outweigh any military advantages that could come from this development.” “A super bomb,” they went on to say, “might become a weapon of genocide.” They believed that “a super bomb should never be produced.” Nevertheless, the Joint Chiefs of Staff, the State and Defense departments, the Joint Committee on Atomic Energy, and a special subcommittee of the National Security Council all recommended proceeding with the hydrogen bomb. Truman announced on Jan. 31, 1950, that he had directed the AEC to continue its work on all forms of atomic weapons, including hydrogen bombs. In March, Los Alamos went on a six-day workweek.

In the months that followed Truman's decision, it seemed less and less likely that a hydrogen bomb could actually be built. The mathematician Stanislaw M. Ulam, with the assistance of Cornelius J. Everett, had undertaken calculations of the amount of tritium that would be needed for ignition of the classical Super. Their results were spectacular and, to Teller, discouraging: the amount needed was estimated to be enormous. In the summer of 1950 more detailed and thorough calculations by other members of the Los Alamos Theoretical Division confirmed Ulam's estimates. This meant that the cost of the Super program would be prohibitive.

Also in the summer of 1950, Fermi and Ulam calculated that liquid deuterium probably would not burn—that is, there would probably be no self-sustaining and propagating reaction. Barring surprises, therefore, the theoretical work to 1950 indicated that every important assumption regarding the viability of the classical Super was wrong. If success was to come, it would have to be accomplished by other means.

The other means became apparent between February and April 1951, following breakthroughs achieved at Los Alamos. One breakthrough was the recognition that the burning of thermonuclear fuel would be more efficient if a high density were achieved throughout the fuel prior to raising its temperature, rather than the classical Super approach of just raising the temperature in one area and then relying on the propagation of thermonuclear reactions to heat the remaining fuel. A second breakthrough was the recognition that these conditions—high compression and high temperature throughout the fuel—could be achieved by containing and converting the radiation from an exploding fission weapon and then using this energy to compress a separate component containing the thermonuclear fuel.

The major figures in these breakthroughs were Ulam and Teller. In December 1950 Ulam had proposed a new fission weapon design, using the mechanical shock of an ordinary fission bomb to compress to a very high density a second fissile core. (This two-stage fission device was conceived entirely independently of the thermonuclear program, its aim being to use fissionable materials more economically.) Early in 1951 Ulam went to see Teller and proposed that the two-stage approach be used to compress and ignite a thermonuclear secondary. Teller suggested radiation implosion, rather than mechanical shock, as the mechanism for compressing the thermonuclear fuel in the second stage. On March 9, 1951, Teller and Ulam presented a report containing both alternatives, entitled “On Heterocatalytic Detonations I. Hydrodynamic Lenses and Radiation Mirrors.” A second report, dated April 4, by Teller, included some extensive calculations by Frederic de Hoffmann and elaborated on how a thermonuclear bomb could be constructed. The two-stage radiation implosion design proposed by these reports, which led to the modern concept of thermonuclear weapons, became known as the Teller–Ulam configuration.

It was immediately clear to all scientists concerned that these new ideas—achieving a high density in the thermonuclear fuel by compression using a fission primary—provided for the first time a firm basis for a fusion weapon. Without hesitation, Los Alamos adopted the new program. Gordon Dean, chairman of the AEC, convened a meeting at the Institute for Advanced Study in Princeton, hosted by Oppenheimer, on June 16–18, 1951, where the new idea was discussed. In attendance were the GAC members, AEC commissioners, and key scientists and consultants from Los Alamos and Princeton. The participants were unanimously in favour of active and rapid pursuit of the Teller–Ulam principle.

Just prior to the conference, on May 8 at Enewetak atoll in the western Pacific, a test explosion called George had successfully used a fission bomb to ignite a small quantity of deuterium and tritium. The original purpose of George had been to confirm the burning of these thermonuclear fuels (about which there had never been any doubt), but with the new conceptual understanding contributed by Teller and Ulam, the test provided the bonus of successfully demonstrating radiation implosion.

In September 1951, Los Alamos proposed a test of the Teller–Ulam concept for November 1952. Engineering of the device, nicknamed Mike, began in October 1951, but unforeseen difficulties required a major redesign of the experiment in March 1952. The Mike device weighed 82 tons, owing in part to cryogenic (low-temperature) refrigeration equipment necessary to keep the deuterium in liquid form. It was successfully detonated during Operation Ivy, on Nov. 1, 1952 (local time), at Enewetak. The explosion achieved a yield equivalent to 10.4 million tons of TNT, about 500 times that of the Nagasaki bomb, and it produced a crater 6,240 feet in diameter and 164 feet deep.
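
The comparison with the Nagasaki bomb is a simple ratio of yields, which the short Python sketch below reproduces. The Mike yield is taken from the text. The Nagasaki figure of roughly 21 kilotons is an assumption (a commonly cited estimate that the text itself does not state), and the function name is illustrative.

```python
def yield_ratio(yield_kt: float, reference_kt: float) -> float:
    """Return how many times larger yield_kt is than reference_kt."""
    if reference_kt <= 0:
        raise ValueError("reference_kt must be positive")
    return yield_kt / reference_kt


MIKE_YIELD_KT = 10_400.0   # 10.4 megatons, as given in the text
NAGASAKI_YIELD_KT = 21.0   # assumption: commonly cited estimate, not from the text

ratio = yield_ratio(MIKE_YIELD_KT, NAGASAKI_YIELD_KT)
print(f"Mike was roughly {ratio:.0f} times the assumed Nagasaki yield")
```

With the assumed reference yield the ratio comes out a little under 500, consistent with the rounded figure in the text. A different estimate of the Nagasaki yield would shift the result accordingly.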

With the Teller–Ulam configuration proved, deliverable thermonuclear weapons were designed and initially tested during Operation Castle in 1954. The first test of the series, conducted on March 1, 1954 (local time), was called Bravo. It used solid lithium deuteride rather than liquid deuterium and produced a yield of 15 megatons, 1,000 times as large as the Hiroshima bomb. Here the principal thermonuclear reaction was the fusion of deuterium and tritium. The tritium was produced in the weapon itself by neutron bombardment of the lithium-6 isotope in the course of the fusion reaction. Using lithium deuteride instead of liquid deuterium eliminated the need for cumbersome cryogenic equipment.
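
The two reactions described in this paragraph can be written out explicitly. The first line shows tritium being bred from lithium-6 by neutron capture, and the second shows the deuterium-tritium fusion that supplies both energy and fresh neutrons. The energy releases shown are the commonly quoted approximate values and are not given in the text.

```latex
\begin{align*}
{}^{6}\mathrm{Li} + n &\rightarrow {}^{4}\mathrm{He} + {}^{3}\mathrm{H} + 4.8\ \mathrm{MeV} \\
{}^{2}\mathrm{H} + {}^{3}\mathrm{H} &\rightarrow {}^{4}\mathrm{He} + n + 17.6\ \mathrm{MeV}
\end{align*}
```

The neutron released by the second reaction can in turn breed more tritium through the first, which is why solid lithium deuteride can stand in for a separate supply of tritium.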

With completion of Castle, the feasibility of lightweight, solid-fuel thermonuclear weapons was proved. Vast quantities of tritium would not be needed after all. New possibilities for adaptation of thermonuclear weapons to various kinds of missiles began to be explored.

In 1948 Kurchatov organized a theoretical group, under the supervision of physicist Igor Y. Tamm, to begin work on a fusion bomb. (This group included Andrey Sakharov, who, after contributing several important ideas to the effort, later became known as the “father of the Soviet H-bomb.”) In general, the Soviet program was two to three years behind that of the United States. The test that took place on Aug. 12, 1953, produced a fusion reaction in lithium deuteride and had a yield of 200 to 400 kilotons. This test, however, was not of a high-yield hydrogen bomb based on the Teller–Ulam configuration or something like it. The first such Soviet test, with a yield in the megaton range, took place on Nov. 22, 1955. On Oct. 30, 1961, the Soviet Union tested the largest known nuclear device, with an explosive force of 58 megatons.

Minister of Defence Harold Macmillan announced in his Statement of Defence, on Feb. 17, 1955, that the United Kingdom planned to develop and produce hydrogen bombs. The formal decision to proceed had been made earlier in secret by a small Defence subcommittee on June 16, 1954, and put to the Cabinet in July. The decision was unaccompanied by the official debate that characterized the American experience five years earlier.

It remained unclear exactly when the first British thermonuclear test occurred. Three high-yield tests in May and June 1957 near Malden Island in the Pacific Ocean were probably of boosted fission designs (see below). The most likely date for the first two-stage thermonuclear test, using the Teller–Ulam configuration or a variant, was Nov. 8, 1957. This test and three others that followed in April and September 1958 contributed novel ideas to modern thermonuclear designs.

Well before their first atomic test, the French assumed they would eventually have to become a thermonuclear power as well. The first French thermonuclear test was conducted on Aug. 24, 1968.

Plans to proceed toward a Chinese hydrogen bomb were begun in 1960, with the formation of a group by the Institute of Atomic Energy to do research on thermonuclear materials and reactions. In late 1963, after the design of the fission bomb was complete, the Theoretical Department of the Ninth Academy, under the direction of Deng Jiaxian (Teng Chia-hsien), was ordered to shift to thermonuclear work. By the end of 1965 the theoretical work for a multistage bomb had been accomplished. After testing two boosted fission devices in 1966, the first Chinese multistage fusion device was detonated on June 17, 1967.

From the late 1940s, U.S. nuclear weapon designers developed and tested warheads to improve their ballistics, to standardize designs for mass production, to increase yields, to improve yield-to-weight and yield-to-volume ratios, and to study their effects. These improvements resulted in the creation of nuclear warheads for a wide variety of strategic and tactical delivery systems.

The first advances came through the test series Operation Sandstone, conducted in the spring of 1948. These three tests used implosion designs of a second generation, which incorporated composite and levitated cores. A composite core consisted of concentric shells of both uranium-235 and plutonium-239, permitting more efficient use of these fissile materials. Higher compression of the fissile material was achieved by levitating the core—that is, introducing an air gap into the weapon to obtain a higher yield for the same amount of fissile material.

Tests during Operation Ranger in early 1951 included implosion devices with cores containing a fraction of a critical mass—a concept originated in 1944 during the Manhattan Project. Unlike the original Fat Man design, these “fractional crit” weapons relied on compressing the fissile core to a higher density in order to achieve a supercritical mass. These designs could achieve appreciable yields with less material.

One technique for enhancing the yield of a fission explosion was called “boosting.” Boosting referred to a process whereby thermonuclear reactions were used as a source of neutrons for inducing fissions at a much higher rate than could be achieved with neutrons from fission chain reactions alone. The concept was invented by Teller by the middle of 1943. By incorporating deuterium and tritium into the core of the fissile material, a higher yield could be obtained from a given quantity of fissile material—or, alternatively, the same yield could be achieved with a smaller amount. The fourth test of Operation Greenhouse, on May 24, 1951, was the first proof test of a booster design. In subsequent decades approximately 90 percent of nuclear weapons in the U.S. stockpile relied on boosting.

Refinements of the basic two-stage Teller–Ulam configuration resulted in thermonuclear weapons with a wide variety of characteristics and applications. Some high-yield deliverable weapons incorporated additional thermonuclear fuel (lithium deuteride) and fissionable material (uranium-235 and uranium-238) in a third stage. While there was no theoretical limit to the yield that could be achieved from a thermonuclear bomb (for example, by adding more stages), there were practical limits on the size and weight of weapons that could be carried by aircraft or missiles. The largest U.S. bombs had yields of 10 to 20 megatons and weighed up to 20 tons. Beginning in the early 1960s, however, the United States built a variety of smaller, lighter weapons that exhibited steadily improving yield-to-weight and yield-to-volume ratios.

The AEC was headed by a five-member board of commissioners, one of whom served as chairman. During the late 1940s and early '50s, the AEC devoted most of its resources to developing and producing nuclear weapons, though it also built several small-scale nuclear-power plants for research purposes. In 1954 the Atomic Energy Act was revised to permit private industry to build nuclear reactors (for electric power), and in 1956 the AEC authorized construction of the world's first two large, privately owned atomic-power plants. Under the chairmanship (1961–71) of Glenn T. Seaborg, the AEC worked with private industry to develop nuclear fission reactors that were economically competitive with thermal generating plants, and the 1970s witnessed an ever-increasing commercial utilization of nuclear power in the United States.

- Let's start right here. You said you are the head of the Tycho Brahe amateur astronomy group. How many members does the group have, and what activities does it pursue?

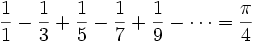

calculated it (taking d as the diameter of the circle), which gives an approximate value of 3.1605 for pi.

Hawking's scientific work concerns the fundamental laws of nature.
